Why is Annotation Important in Autonomous Vehicles?

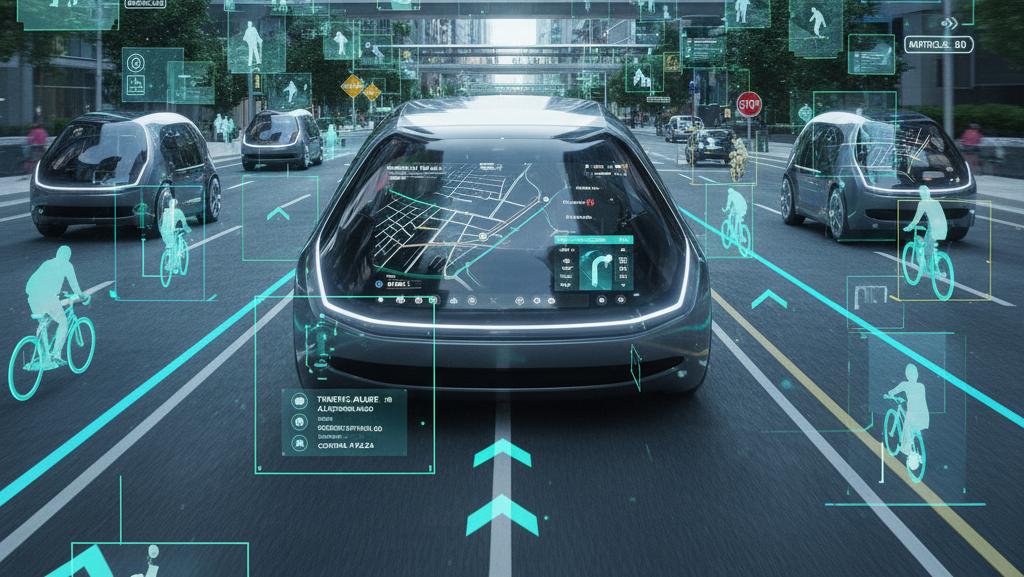

Autonomous vehicles depend on AI models that interpret complex real-world environments using inputs from cameras, LiDAR, RADAR, and sensors. To teach these models how to detect, classify, and respond to various objects, the training data must be meticulously labeled.

Without high-quality annotation, the system cannot distinguish between roads, vehicles, traffic lights, pedestrians, or obstacles — leading to poor navigation and safety risks.

Annotation ensures:

- Accurate object detection (vehicles, pedestrians, animals, traffic signs).

- Reliable lane and road boundary recognition.

- Real-time decision-making for navigation and collision avoidance.

- Better sensor fusion from multiple data sources (LiDAR, RADAR, cameras).

Types of Autonomous Vehicle Annotation

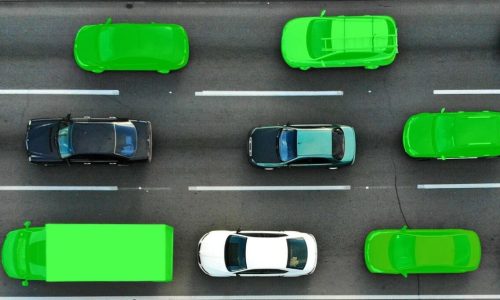

Bounding Box Annotation: Used to identify and locate objects like cars, cyclists, or pedestrians in images or video frames.

Semantic Segmentation: Provides pixel-level classification of scenes — roads, lanes, sidewalks, vegetation, vehicles, and sky — crucial for understanding the driving environment.

Polyline Annotation: Marks lane lines, road edges, and drivable areas, enabling precise path planning and lane-keeping assistance.

3D Cuboid Annotation: Applied to LiDAR and RADAR data for spatial object detection and depth estimation, helping vehicles understand distances and object dimensions.

Keypoint Annotation: Identifies critical points such as pedestrian joints or vehicle corners, aiding motion tracking and behavior prediction.

Sensor Fusion Annotation: Combines visual, LiDAR, and RADAR data annotations for a unified 3D perception model — essential for reliable autonomous navigation.